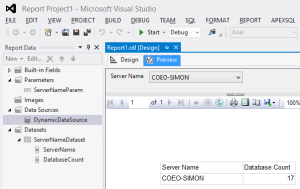

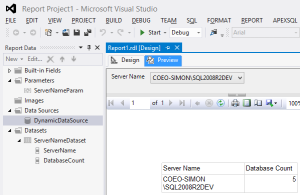

Recently I have been working with a client who has a production SQL Server instance that has ~2.5k databases on it. They are in the process of moving to a new SQL Serve cluster and are reviewing their other non-production environments too.

One of their major pain points at the is their pre-production refresh process, this involves restoring all 2.5k databases to a pre-production SQL Server instance. The current process involves retrieving backups from Iron Mountain and performing file system restores. They are looking to streamline this process and automate it as much as possible. Most of the process could be achieved using T-SQL, but there are extra file system tasks that need to be performed which are more easily achieved using PowerShell. PowerShell 4.0 brought us the Restore-SQLDatabase cmdlet and so it was worth evaluating this to see if I could encapsulate the entire thing in one script. It’s still a work in progress but here are some snippets from it.

Firstly, I should probably explain that their backups are all stored in a single network share in this structure:

\\BackupRoot\Database\Full\backup.bak

The objective is to restore the most recent full backup of each production database to the pre-production server.

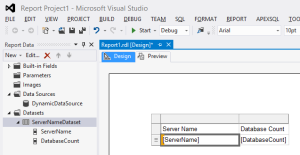

I’ve created some variables at the top of the script (real variable data removed):

$backupRoot = Get-ChildItem -Path "C:\Temp\Databases" $datafilesDest = "C:\Temp\DataFiles" $logfilesDest = "C:\Temp\LogFiles" $server = "SIMON-XPS13\SQL2014"

A description of the individual commands within the main body is included in the code below.

## For each folder in the backup root directory...

#

foreach($folder in $backupRoot)

{

# Get the most recent .bak files for all databases...

$backupFiles = Get-ChildItem -Path $folder.FullName -Filter "*.bak" -Recurse | Sort-Object -Property CreationTime -Descending | Select-Object -First 1

# For each .bak file...

foreach ($backupFile in $backupFiles)

{

# Restore the header to get the database name...

$query = "RESTORE HEADERONLY FROM DISK = N'"+$backupFile.FullName+"'"

$headerInfo = Invoke-Sqlcmd -ServerInstance $server -Query $query

$databaseName = $headerInfo.DatabaseName

# Restore the file list to get the logical filenames of the database files...

$query = "RESTORE FILELISTONLY FROM DISK = N'"+$backupFile.FullName+"'"

$files = Invoke-Sqlcmd -ServerInstance $server -Query $query

# Differentiate data files from log files...

$dataFile = $files | Where-Object -Property Type -EQ "D"

$logFile = $files | Where-Object -Property Type -EQ "L"

# Set some variables...

$dataFileName = $dataFile.LogicalName

$logFileName = $logFile.LogicalName

# Set the destination of the restored files...

$dataFileFullPath = $datafilesDest+"\"+$dataFileName+".mdf"

$logFileFullPath = $logfilesDest+"\"+$logFileName+".ldf"

# Create some "Relocate" file objects to pass to the Restore-SqlDatabase cmdlet...

$RelocateData = New-Object 'Microsoft.SqlServer.Management.Smo.RelocateFile, Microsoft.SqlServer.SmoExtended, Version=12.0.0.0, Culture=neutral, PublicKeyToken=89845dcd8080cc91' -ArgumentList $dataFileName, $dataFileFullPath

$RelocateLog = New-Object 'Microsoft.SqlServer.Management.Smo.RelocateFile, Microsoft.SqlServer.SmoExtended, Version=12.0.0.0, Culture=neutral, PublicKeyToken=89845dcd8080cc91' -ArgumentList $logFileName, $logFileFullPath

# Perform the database restore... and then go around the loop.

Restore-SqlDatabase -ServerInstance $server -Database $databaseName -BackupFile $backupFile.FullName -RelocateFile @($RelocateData,$RelocateLog) -ReplaceDatabase

}

}

With this script I was able to restore all 2.5k databases with relative ease. The great thing is that this can be run in just a few clicks and is much less arduous than the previous process. This script is unlikely to suit your exact use case but hopefully you can take from it what you need and tailor it to your environment.

If you have any questions, then please don’t hesitate to contact me.